Sign Language Interpreting Leaders Chart a Path for Ethical AI in Automated Interpreting

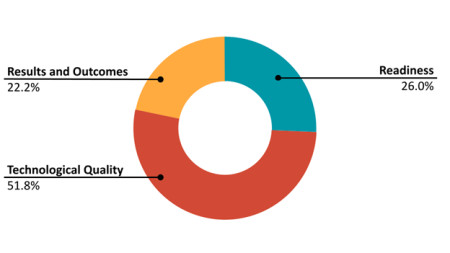

HOUSTON, February 27, 2024 (Newswire.com) - The Advisory Group on AI and Sign Language Interpreting has published a report on the results of a survey of users of American Sign Language (ASL) interpreting services. The findings reveal the need for regulation in three critical impact areas to ensure that any automated interpreting by artificial intelligence (AIxAI) is safe and ethical.


The findings show nearly equal emphasis on the social aspects of interpreting and on the quality of the technologies.

Social aspects are the norms and habits of interacting with other people that make up a culture. These are often implicit and 'invisible' because, for each individual, they are natural: it's the way you grew up, it's what people do, it's how to behave in this or that situation. Language differences frequently make these otherwise unremarkable ways of being suddenly very obvious. When do you ask questions? What do you do when you're unsure whether you're being understood? What do you do when you know that something you said has been misunderstood?

Deaf people live with these questions as a daily reality, so it's fascinating that their discourse about automated interpreting draws so much attention to whether h/Hearing society is prepared to address the social implications of AIxAI. They are also keenly attentive both to immediate or static results (for instance, one-time measurements of bidirectional translation accuracy) and to the long-term outcomes of AIxAI as a communication medium between human beings.

For AIxAI to produce fair and ethical outcomes, the quality of the technologies is paramount. Who will define baselines, benchmarks, and performance standards? At what point do industry experimentation and academic rigor clear the bar for moving from test environments into production in the public and private spheres? Deaf discourse in signed languages about technology-mediated communication modalities includes the successes and failures experienced over the past century by generations of deaf people, who have been constant targets of technological invention.

Learn more at the public presentation, Toward a Legal Foundation for Ubiquitous Automated Interpreting by Artificial Intelligence, on March 1, 2024, 11 am-1 pm EST. Presentation in American Sign Language (ASL); spoken English interpreting provided.

Register: https://masterword.zoom.us/webinar/register/WN_0ndPwRMHTxyhZMT5S0dycQ

Tim Riker (Brown University), Teresa Blankmeyer Burke (Gallaudet University), Jeff Shaul (GoSign) and AnnMarie Killian (TDI) will share insights on the readiness of society and technology to provide automated interpreting by artificial intelligence (AIxAI), based on an analysis of deaf discourse about AIxAI. The qualitative study, presented by the Advisory Group on AI and Sign Language Interpreting, focuses on criteria for three critical impact areas that could undergird the safety and fairness of AIxAI for all languages.

About the Advisory Group on AI and Sign Language Interpreting: The Advisory Group (AG) is an independent consortium of leaders and advocates for sign language interpreting across language services, industry, and academia. Under the leadership of the Registry of Interpreters for the Deaf (RID, https://rid.org/) and the Conference of Interpreter Trainers (CIT, https://citsl.org/), the AG has been building a partnership with the Interpreting SAFE AI Task Force for the past six months. For more information, please visit https://safeaitf.org/deafsafeai/

Source: Interpreting SAFE-AI Task Force